ManifoldCF - End-user Documentation

- Overview

- Defining Output Connections

- Defining Transformation Connections

- Defining Authority Groups

- Defining Repository Connections

- Defining Notification Connections

- Defining User Mapping Connections

- Defining Authority Connections

- Creating Jobs

- Executing Jobs

- Status Reports

- History Reports

- A Note About Credentials

- Output Connection Types

- Transformation Connection Types

- User Mapping Connection Types

- Authority Connection Types

- Active Directory Authority Connection

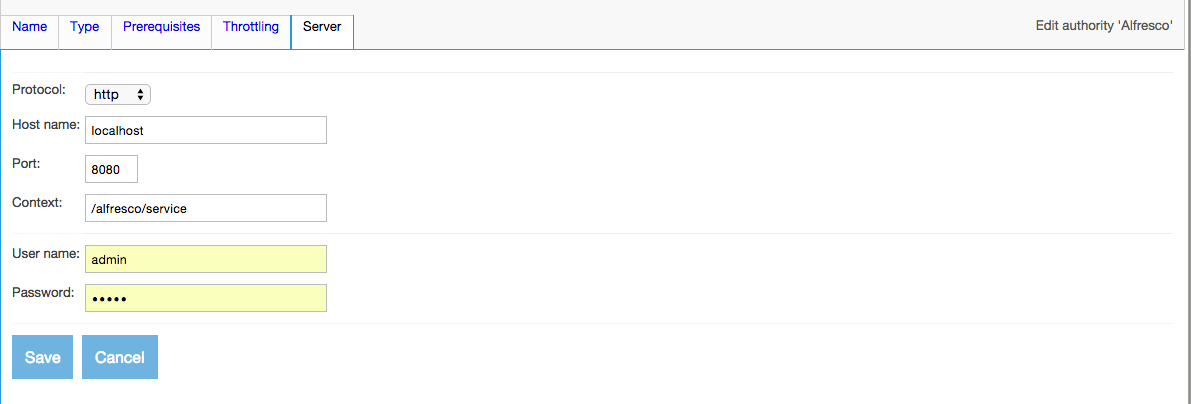

- Alfresco Webscript Authority Connection

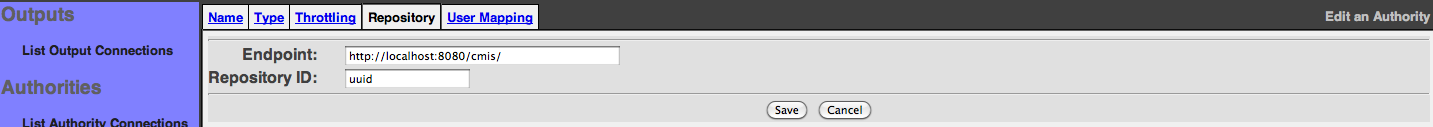

- CMIS Authority Connection

- EMC Documentum Authority Connection

- Generic Authority

- Generic Database Authority Connection

- LDAP Authority Connection

- OpenText LiveLink Authority Connection

- Autonomy Meridio Authority Connection

- Microsoft SharePoint ActiveDirectory Authority Connection

- Microsoft SharePoint Native Authority Connection

- Repository Connection Types

- Alfresco Repository Connection

- Alfresco Webscript Repository Connection

- CMIS Repository Connection

- EMC Documentum Repository Connection

- Dropbox Repository Connection

- Individual Email Repository Connection

- IBM FileNet P8 Repository Connection

- Generic WGET-Compatible File System Repository Connection

- Generic Connector

- Generic Database Repository Connection

- Google Drive Repository Connection

- HDFS Repository Connection (WGET compatible)

- Jira Repository Connection

- OpenText LiveLink Repository Connection

- Autonomy Meridio Repository Connection

- Generic RSS Repository Connection

- Microsoft SharePoint Repository Connection

- Generic Web Repository Connection

- Windows Share/DFS Repository Connection

- Wiki Repository Connection

- Notification Connection Types

Overview

This manual is intended for an end-user of ManifoldCF. It is assumed that the Framework has been properly installed, either by you or by a system integrator, with all required services running and desired connection types properly registered. If you think you need to know how to do that yourself, please visit the "Developer Resources" page.

Most of this manual describes how to use the ManifoldCF user interface. On a standard ManifoldCF deployment, you would reach that interface by giving your browser a URL something like this: http://my-server-name:8345/mcf-crawler-ui. This will, of course, differ from system to system. Please contact your system administrator to find out what URL is appropriate for your environment.

The ManifoldCF UI has been tested with Firefox and various incarnations of Internet Explorer. If you use another browser, there is a small chance that the UI will not work properly. Please let your system integrator know if you find any browser incompatibility problems.

When you enter the Framework user interface the first time, you will first be asked to log in:

Enter the login user name and password for your system. By default, the user name is "admin" and the password is "admin", although your system administrator can (and should) change this. Then, click the "Login" button. If you entered the correct credentials, you should see a screen that looks something like this:

On the left, there are menu options you can select. The main pane on the right shows a welcome message, but depending on what you select on the left, the contents of the main pane will change. Before you try to accomplish anything, please take a moment to read the descriptions below of the menu selections, and thus get an idea of how the Framework works as a whole.

Defining Output Connections

The Framework UI's left-side menu contains a link for listing output connections. An output connection is a connection to a system or place where documents fetched from various repositories can be written to. This is often a search engine.

All jobs must specify an output connection. You can create an output connection by clicking the "List Output Connections" link in the left-side navigation menu. When you do this, the following screen will appear:

On a freshly created system, there may well be no existing output connections listed. If there are already output connections, they will be listed on this screen, along with links that allow you to view, edit, or delete them. To create a new output connection, click the "Add new output connection" link at the bottom. The following screen will then appear:

The tabs across the top each present a different view of your output connection. Each tab allows you to edit a different characteristic of that connection. The exact set of tabs you see depends on the connection type you choose for the connection.

Start by giving your connection a name and a description. Remember that all output connection names must be unique, and cannot be changed after the connection is defined. The name must be no more than 32 characters long. The description can be up to 255 characters long. When you are done, click on the "Type" tab. The Type tab for the connection will then appear:
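The naming rules above can be summarized in a short sketch. This is only an illustration of the stated limits (32 characters for the name, 255 for the description, unique names), not ManifoldCF code; the function name `validate_connection` and its parameters are hypothetical.

```python
MAX_NAME_LENGTH = 32          # limit stated in the text above
MAX_DESCRIPTION_LENGTH = 255  # limit stated in the text above


def validate_connection(name, description, existing_names):
    """Return a list of problems; an empty list means the values fit the rules."""
    problems = []
    if len(name) > MAX_NAME_LENGTH:
        problems.append("name is longer than 32 characters")
    if name in existing_names:
        problems.append("name is already used by another connection")
    if len(description) > MAX_DESCRIPTION_LENGTH:
        problems.append("description is longer than 255 characters")
    return problems


# Example: a short, unused name with a short description passes.
print(validate_connection("solr-main", "Primary search index", {"solr-test"}))
```

Because a name cannot be changed once the connection is defined, it is worth checking these points before you click "Save".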

The list of output connection types in the pulldown box, and what they are each called, is determined by your system integrator. The configuration tabs for each different kind of output connection type are described in separate sections below.

After you choose an output connection type, click the "Continue" button at the bottom of the pane. You will then see all the tabs appropriate for that kind of connection appear, and a "Save" button will also appear at the bottom of the pane. You must click the "Save" button when you are done in order to create your connection. If you click "Cancel" instead, the new connection will not be created. (The same thing will happen if you click on any of the navigation links in the left-hand pane.)

Every output connection has a "Throttling" tab. The tab looks like this:

On this tab, you can specify only one thing: how many open connections are allowed at any given time to the system the output connection talks with. This restriction helps prevent that system from being overloaded, or in some cases exceeding its license limitations. Conversely, making this number larger allows for greater overall throughput. The default value is 10, which may not be optimal for all types of output connections. Please refer to the section of the manual describing your output connection type for more precise recommendations.
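To make the effect of this setting concrete, here is a minimal sketch of a connection limit, assuming a simple counting semaphore. It does not show how ManifoldCF implements the limit internally; the class `ConnectionLimiter` and its `timeout` parameter are hypothetical.

```python
import threading
from contextlib import contextmanager


class ConnectionLimiter:
    """Allow at most max_connections holders at any one time (illustrative only)."""

    def __init__(self, max_connections=10):  # 10 is the default named in the text
        self._slots = threading.BoundedSemaphore(max_connections)

    @contextmanager
    def connection(self, timeout=None):
        # Wait for a free slot; give up after `timeout` seconds if one is given.
        if not self._slots.acquire(timeout=timeout):
            raise TimeoutError("no free connection slot")
        try:
            yield
        finally:
            # Always hand the slot back, even if the work failed.
            self._slots.release()


limiter = ConnectionLimiter(max_connections=2)
with limiter.connection():
    pass  # talk to the target system here
```

A smaller limit protects the target system; a larger one lets more work proceed in parallel, which is the trade-off described above.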

Please refer to the section of the manual describing your chosen output connection type for a description of the tabs appropriate for that connection type.

After you save your connection, a summary screen will be displayed that describes your connection's configuration. This looks something like this (although the details will differ somewhat based on what connection type you chose):

The summary screen contains a line where the connection's status is displayed. If you did everything correctly, the message "Connection working" will be displayed as a status. If there was a problem, you will see a connection-type-specific diagnostic message instead. If this happens, you will need to correct the problem, by either fixing your infrastructure, or by editing the connection configuration appropriately, before the output connection will work correctly.

Also note that there are five buttons along the bottom of the display: "Refresh", "Edit", "Delete", "Re-index all associated documents", and "Remove all associated records". We'll go into the purpose for each of these buttons in turn.

The "Refresh" button simply reloads the view page for the output connection, and updates the connection status. Use this button when you have made changes to the external system your output connection is connected to that might affect whether the connection will succeed or not.

The "Edit" button allows you to go back and edit the connection parameters. Use this button if you want to change the connection's characteristics or specifications in any way.

The "Delete" button allows you to delete the connection. Use this button if you no longer want the connection to remain in the available list of output connections. Note that ManifoldCF will not allow you to delete a connection that is being referenced by a job.

The "Re-index all associated documents" button will nullify the recorded versions of all documents currently indexed via this connection. This is not a button you would use often. Click it when you have changed the configuration of whatever system the output connection is describing, and therefore all documents will eventually need to be reindexed.

The "Remove all associated records" button will remove from ManifoldCF all knowledge that any indexing has taken place at all to this connection. This is also not a button you would use often. Click it when you have removed the entire index that the output connection describes from the target repository.
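The difference between the last two buttons can be pictured with a toy model. The sketch below assumes that the crawler keeps a table of recorded document versions and reindexes a document whenever its current version differs from the recorded one. That table, and all function names here, are assumptions made for illustration and do not describe ManifoldCF's actual storage.

```python
def needs_indexing(doc_id, current_version, recorded_versions):
    """A document is (re)indexed when its recorded version is missing or different."""
    return recorded_versions.get(doc_id) != current_version


def reindex_all(recorded_versions):
    """Keep a record for every document but forget its version."""
    return {doc_id: None for doc_id in recorded_versions}


def remove_all_records(recorded_versions):
    """Forget that anything was ever indexed through this connection."""
    return {}


recorded = {"doc-1": "v3", "doc-2": "v7"}
print(needs_indexing("doc-1", "v3", recorded))               # unchanged document
print(needs_indexing("doc-1", "v3", reindex_all(recorded)))  # after re-index request
```

In this model, both actions cause documents to be sent to the index again on a later crawl, but only the second discards the records themselves.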

Defining Transformation Connections

The Framework UI's left-side menu contains a link for listing transformation connections. A transformation connection is a connection to an engine where documents fetched from various repositories can be manipulated. This typically involves metadata extraction or mapping.

A job does not need to specify any transformation connections. In many cases, the final destination search engine has an included data conversion pipeline. But in the case where such data extraction and conversion is not available, ManifoldCF provides a way of taking care of it internally.

You can create a transformation connection by clicking the "List Transformation Connections" link in the left-side navigation menu. When you do this, the following screen will appear:

On a freshly created system, there may well be no existing transformation connections listed. If there are already transformation connections, they will be listed on this screen, along with links that allow you to view, edit, or delete them. To create a new transformation connection, click the "Add new transformation connection" link at the bottom. The following screen will then appear:

The tabs across the top each present a different view of your transformation connection. Each tab allows you to edit a different characteristic of that connection. The exact set of tabs you see depends on the connection type you choose for the connection.

Start by giving your connection a name and a description. Remember that all transformation connection names must be unique, and cannot be changed after the connection is defined. The name must be no more than 32 characters long. The description can be up to 255 characters long. When you are done, click on the "Type" tab. The Type tab for the connection will then appear:

The list of transformation connection types in the pulldown box, and what they are each called, is determined by your system integrator. The configuration tabs for each different kind of transformation connection type are described in separate sections below.

After you choose a transformation connection type, click the "Continue" button at the bottom of the pane. You will then see all the tabs appropriate for that kind of connection appear, and a "Save" button will also appear at the bottom of the pane. You must click the "Save" button when you are done in order to create your connection. If you click "Cancel" instead, the new connection will not be created. (The same thing will happen if you click on any of the navigation links in the left-hand pane.)

Every transformation connection has a "Throttling" tab. The tab looks like this:

On this tab, you can specify only one thing: how many open connections are allowed at any given time to the system the transformation connection talks with. This restriction helps prevent that system from being overloaded, or in some cases exceeding its license limitations. Conversely, making this number larger allows for greater overall throughput. The default value is 10, which may not be optimal for all types of transformation connections. Please refer to the section of the manual describing your transformation connection type for more precise recommendations.

Please refer to the section of the manual describing your chosen transformation connection type for a description of the tabs appropriate for that connection type.

After you save your connection, a summary screen will be displayed that describes your connection's configuration. This looks something like this (although the details will differ somewhat based on what connection type you chose):

The summary screen contains a line where the connection's status is displayed. If you did everything correctly, the message "Connection working" will be displayed as a status. If there was a problem, you will see a connection-type-specific diagnostic message instead. If this happens, you will need to correct the problem, by either fixing your infrastructure, or by editing the connection configuration appropriately, before the transformation connection will work correctly.

Also note that there are three buttons along the bottom of the display: "Refresh", "Edit", and "Delete". We'll go into the purpose for each of these buttons in turn.

The "Refresh" button simply reloads the view page for the transformation connection, and updates the connection status. Use this button when you have made changes to the external system your transformation connection is connected to that might affect whether the connection will succeed or not.

The "Edit" button allows you to go back and edit the connection parameters. Use this button if you want to change the connection's characteristics or specifications in any way.

The "Delete" button allows you to delete the connection. Use this button if you no longer want the connection to remain in the available list of transformation connections. Note that ManifoldCF will not allow you to delete a connection that is being referenced by a job.

Defining Authority Groups

The Framework UI's left-side menu contains a link for listing authority groups. An authority group is a collection of authorities that all cooperate to furnish security for each document from repositories that you select. For example, a SharePoint 2010 repository with the Claims Based authorization feature enabled may contain documents that are authorized by SharePoint itself, by Active Directory, and by others. Documents from such a SharePoint repository would therefore refer to an authority group which would have a SharePoint native authority, a SharePoint Active Directory authority, and other SharePoint claims based authorities as members. But most of the time, an authority group will consist of a single authority that is appropriate for the repository the authority group is meant to secure.

Since you need to select an authority group when you define an authority connection, you should define your authority groups before setting up your authority connections. If you don't have any authority groups defined, you cannot create authority connections at all. But if you select the wrong authority group when setting up your authority connection, you can go back later and change your selection.

It is also a good idea to define your authority groups before creating any repository connections, since each repository connection will also need to refer back to an authority group in order to secure documents. While it is possible to change the relationship between a repository connection and its authority group after-the-fact, in practice such changes may cause many documents to be reindexed the next time an associated job is run.

You can create an authority group by clicking the "List Authority Groups" link in the left-side navigation menu. When you do this, the following screen will appear:

If there are already authority groups, they will be listed on this screen, along with links that allow you to view, edit, or delete them. To create a new authority group, click the "Add a new authority group" link at the bottom. The following screen will then appear:

The tabs across the top each present a different view of your authority group. For authority groups, there is only ever one tab, the "Name" tab.

Give your authority group a name and a description. Remember that all authority group names must be unique, and cannot be changed after the authority group is defined. The name must be no more than 32 characters long. The description can be up to 255 characters long. When you are done, click on the "Save" button. You must click the "Save" button when you are done in order to create or update your authority group. If you click "Cancel" instead, the new authority group will not be created. (The same thing will happen if you click on any of the navigation links in the left-hand pane.)

After you save your authority group, a summary screen will be displayed that describes the group, and you can proceed on to create any authority connections that belong to the authority group, or repository connections that refer to the authority group.

Defining Repository Connections

The Framework UI's left-hand menu contains a link for listing repository connections. A repository connection is a connection to the repository system that contains the documents that you are interested in indexing.

All jobs require you to specify a repository connection, because that is where they get their documents from. It is therefore necessary to create a repository connection before indexing any documents.

A repository connection may also have an associated authority group. The specified authority group determines the security environment that is attached to documents fetched through the repository connection. While it is possible to change the specified authority group for a repository connection after a crawl has been done, in practice this will require all documents associated with that repository connection to be reindexed in order to be searchable by anyone. Therefore, we recommend that you set up your desired authority group before defining your repository connection.

You can create a repository connection by clicking the "List Repository Connections" link in the left-side navigation menu. When you do this, the following screen will appear:

On a freshly created system, there may well be no existing repository connections listed. If there are already repository connections, they will be listed on this screen, along with links that allow you to view, edit, or delete them. To create a new repository connection, click the "Add a new connection" link at the bottom. The following screen will then appear:

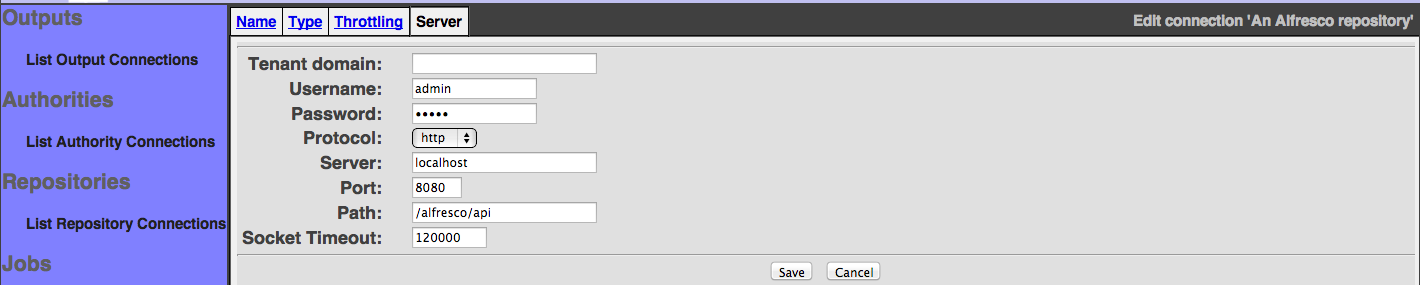

The tabs across the top each present a different view of your repository connection. Each tab allows you to edit a different characteristic of that connection. The exact set of tabs you see depends on the connection type you choose for the connection.

Start by giving your connection a name and a description. Remember that all repository connection names must be unique, and cannot be changed after the connection is defined. The name must be no more than 32 characters long. The description can be up to 255 characters long. When you are done, click on the "Type" tab. The Type tab for the connection will then appear:

The list of repository connection types in the pulldown box, and what they are each called, is determined by your system integrator. The configuration tabs for each different kind of repository connection type are described in this document in separate sections below.

You may also at this point select the authority group that will secure all documents fetched from this repository. You do not need to define your authority group's authority connections before doing this step, but you will not be able to search for your documents after indexing them until you do.

After you choose the desired repository connection type and an authority group (if desired), click the "Continue" button at the bottom of the pane. You will then see all the tabs appropriate for that kind of connection appear, and a "Save" button will also appear at the bottom of the pane. You must click the "Save" button when you are done in order to create or update your connection. If you click "Cancel" instead, the new connection will not be created. (The same thing will happen if you click on any of the navigation links in the left-hand pane.)

Every repository connection has a "Throttling" tab. The tab looks like this:

On this tab, you can specify two things. The first is how many open connections are allowed at any given time to the system the repository connection talks with. This restriction helps prevent that system from being overloaded, or in some cases exceeding its license limitations. Conversely, making this number larger allows for greater overall throughput. The default value is 10, which may not be optimal for all types of repository connections. Please refer to the section of the manual describing your repository connection type for more precise recommendations. The second specifies how rapidly, on average, the crawler will fetch documents via this connection.

Each connection type has its own notion of "throttling bin". A throttling bin is the name of a resource whose access needs to be throttled. For example, the Web connection type uses a document's server name as the throttling bin associated with the document, since (presumably) it will be access to each individual server that will need to be throttled independently.
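As an illustration of the Web example, the sketch below derives a throttling bin from a document URL and groups documents by bin. It assumes the bin is the lower-cased host name without the port; the real Web connection type may treat ports or case differently, and both function names are hypothetical.

```python
from collections import defaultdict
from urllib.parse import urlsplit


def web_throttling_bin(document_url):
    """Use the server name of a URL as its throttling bin (empty string if absent)."""
    return urlsplit(document_url).hostname or ""


def group_by_bin(document_urls):
    """Group URLs so that each server's documents can be throttled independently."""
    groups = defaultdict(list)
    for url in document_urls:
        groups[web_throttling_bin(url)].append(url)
    return dict(groups)


urls = [
    "http://a.example.com/1",
    "http://a.example.com:8080/2",
    "https://b.example.org/x",
]
print(group_by_bin(urls))
```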

On the repository connection "Throttling" tab, you can specify an unrestricted number of throttling descriptions. Each throttling description consists of a regular expression that describes a family of throttling bins, plus a helpful description, plus an average number of fetches per minute for each of the throttling bins that matches the regular expression. If a given throttling bin matches more than one throttling description, the most conservative fetch rate is chosen.

The simplest regular expression you can use is the empty regular expression. This will match all of the connection's throttle bins, and thus will allow you to specify a default throttling policy for the connection. Set the desired average fetch rate, and click the "Add" button. The throttling tab will then appear something like this:

If no throttle descriptions are added, no fetch-rate throttling will be performed.
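The matching rules for throttling descriptions can be sketched as follows. This sketch makes two assumptions that the text does not spell out: a regular expression matches a bin if it is found anywhere in the bin name (which is what lets the empty expression match every bin), and "most conservative" means the lowest fetch rate. The class and function names are hypothetical.

```python
import re
from dataclasses import dataclass


@dataclass
class ThrottleDescription:
    bin_regex: str             # regular expression describing a family of bins
    description: str           # free-text note for the person maintaining the rule
    fetches_per_minute: float  # average fetch rate for each matching bin


def effective_fetch_rate(throttling_bin, descriptions):
    """Return the lowest matching rate, or None when no description matches."""
    rates = [
        d.fetches_per_minute
        for d in descriptions
        if re.search(d.bin_regex, throttling_bin)  # "find anywhere" semantics (assumed)
    ]
    return min(rates) if rates else None


def average_seconds_between_fetches(rate):
    """Convert fetches per minute into an average gap in seconds (None = unthrottled)."""
    return None if rate is None else 60.0 / rate


rules = [
    ThrottleDescription("", "default for all servers", 60),
    ThrottleDescription(r"\.example\.com$", "be gentle with example.com", 12),
]
print(effective_fetch_rate("www.example.com", rules))
print(effective_fetch_rate("other.org", rules))
```

With these rules, a bin matched by both descriptions gets the slower of the two rates, and a connection with no descriptions at all is not rate-limited.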

Please refer to the section of the manual describing your chosen repository connection type for a description of the tabs appropriate for that connection type.

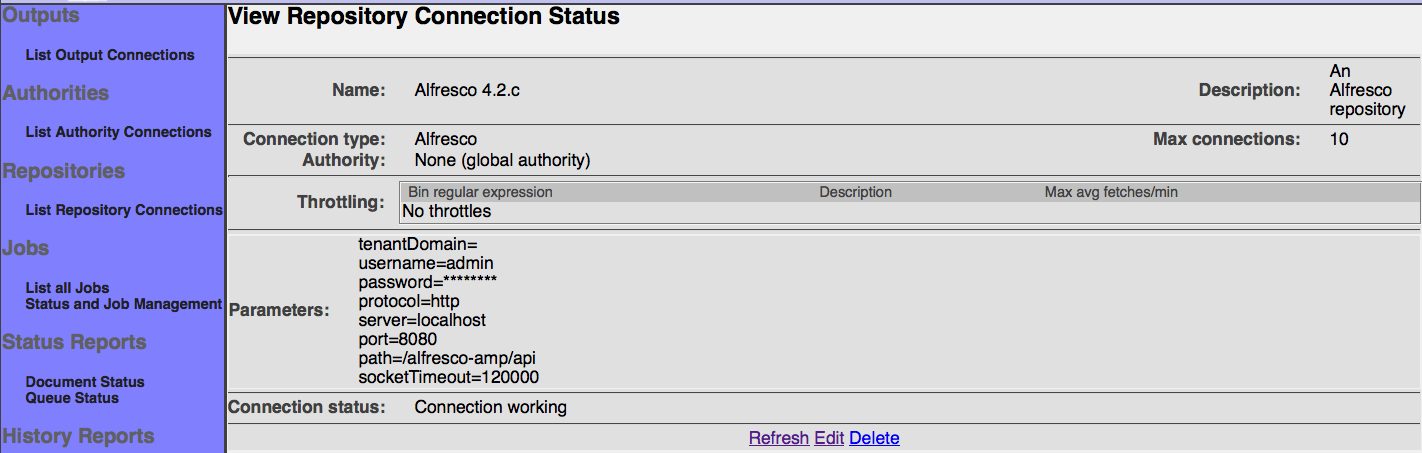

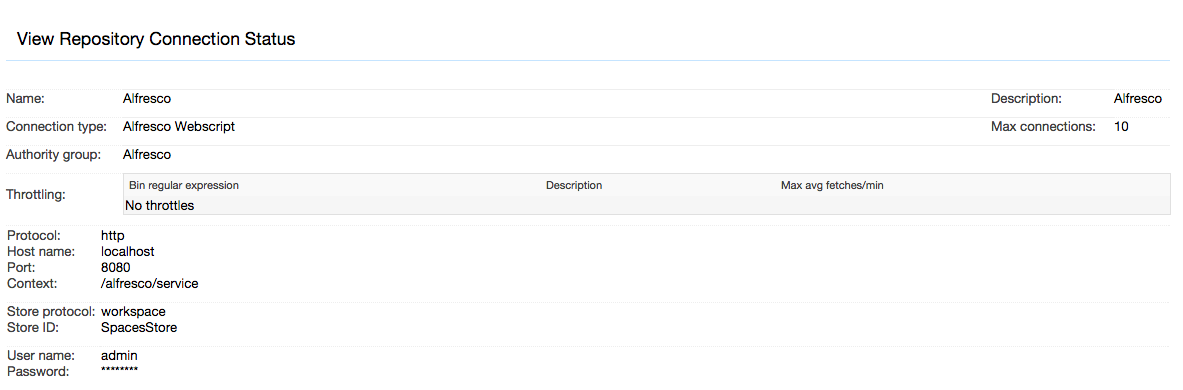

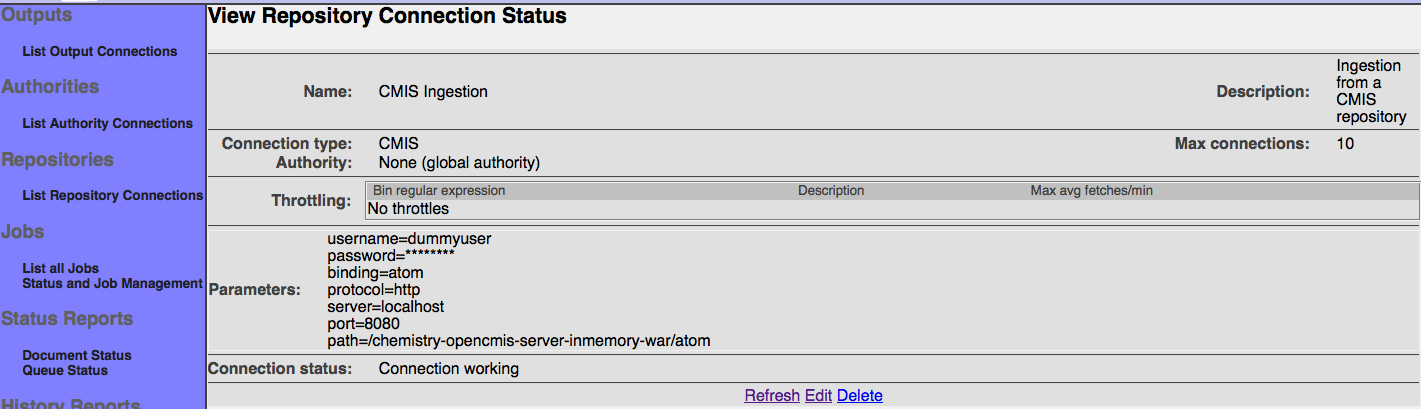

After you save your connection, a summary screen will be displayed that describes your connection's configuration. This looks something like this (although the details will differ somewhat based on what connection type you chose):

The summary screen contains a line where the connection's status is displayed. If you did everything correctly, the message "Connection working" will be displayed as a status. If there was a problem, you will see a connection-type-specific diagnostic message instead. If this happens, you will need to correct the problem, by either fixing your infrastructure, or by editing the connection configuration appropriately, before the repository connection will work correctly.

Also note that there are four buttons along the bottom of the display: "Refresh", "Edit", "Delete", and "Clear all related history". We'll go into the purpose for each of these buttons in turn.

The "Refresh" button simply reloads the view page for the repository connection, and updates the connection status. Use this button when you have made changes to the external system your repository connection is connected to that might affect whether the connection will succeed or not.

The "Edit" button allows you to go back and edit the connection parameters. Use this button if you want to change the connection's characteristics or specifications in any way.

The "Delete" button allows you to delete the connection. Use this button if you no longer want the connection to remain in the available list of repository connections. Note that ManifoldCF will not allow you to delete a connection that is being referenced by a job.

The "Clear all related history" button will remove all history data associated with the current repository connection. This is not a button you would use often. History data is used to construct reports, such as the "Simple History" report. It is valuable as a diagnostic aid to understand what the crawler has been doing. There is an automated way of configuring ManifoldCF to remove history that is older than a specified interval before the current time. But if you want to remove all the history right away, this button will do that.

Defining Notification Connections

The Framework UI's left-side menu contains a link for listing notification connections. A notification connection is a connection to an engine that generates notification messages, such as email or text messages, specifically to note the end or unexpected termination of a job.

Jobs may specify one or more notification connections. You can create a notification connection by clicking the "List Notification Connections" link in the left-side navigation menu. When you do this, the following screen will appear:

On a freshly created system, there may well be no existing notification connections listed. If there are already notification connections, they will be listed on this screen, along with links that allow you to view, edit, or delete them. To create a new notification connection, click the "Add new notification connection" link at the bottom. The following screen will then appear:

The tabs across the top each present a different view of your notification connection. Each tab allows you to edit a different characteristic of that connection. The exact set of tabs you see depends on the connection type you choose for the connection.

Start by giving your connection a name and a description. Remember that all notification connection names must be unique, and cannot be changed after the connection is defined. The name must be no more than 32 characters long. The description can be up to 255 characters long. When you are done, click on the "Type" tab. The Type tab for the connection will then appear:

The list of notification connection types in the pulldown box, and what they are each called, is determined by your system integrator. The configuration tabs for each different kind of notification connection type are described in separate sections below.

After you choose a notification connection type, click the "Continue" button at the bottom of the pane. You will then see all the tabs appropriate for that kind of connection appear, and a "Save" button will also appear at the bottom of the pane. You must click the "Save" button when you are done in order to create your connection. If you click "Cancel" instead, the new connection will not be created. (The same thing will happen if you click on any of the navigation links in the left-hand pane.)

Every notification connection has a "Throttling" tab. The tab looks like this:

On this tab, you can specify only one thing: how many open connections are allowed at any given time to the system the notification connection talks with. This restriction helps prevent that system from being overloaded, or in some cases exceeding its license limitations. Conversely, making this number larger allows for greater overall throughput. The default value is 10, which may not be optimal for all types of notification connections. Please refer to the section of the manual describing your notification connection type for more precise recommendations.

Please refer to the section of the manual describing your chosen notification connection type for a description of the tabs appropriate for that connection type.

After you save your connection, a summary screen will be displayed that describes your connection's configuration. This looks something like this (although the details will differ somewhat based on what connection type you chose):

The summary screen contains a line where the connection's status is displayed. If you did everything correctly, the message "Connection working" will be displayed as a status. If there was a problem, you will see a connection-type-specific diagnostic message instead. If this happens, you will need to correct the problem, by either fixing your infrastructure, or by editing the connection configuration appropriately, before the notification connection will work correctly.

Also note that there are three buttons along the bottom of the display: "Refresh", "Edit", and "Delete". We'll go into the purpose for each of these buttons in turn.

The "Refresh" button simply reloads the view page for the notification connection, and updates the connection status. Use this button when you have made changes to the external system your notification connection is connected to that might affect whether the connection will succeed or not.

The "Edit" button allows you to go back and edit the connection parameters. Use this button if you want to change the connection's characteristics or specifications in any way.

The "Delete" button allows you to delete the connection. Use this button if you no longer want the connection to remain in the available list of notification connections. Note that ManifoldCF will not allow you to delete a connection that is being referenced by a job.

Defining User Mapping Connections

The Framework UI's left-side menu contains a link for listing user mapping connections. A user mapping connection is a connection to a system that understands how to map a user name into a different user name. For example, if you want to enforce document security using LiveLink, but you have only an Active Directory user name, you will need to map the Active Directory user name to a corresponding LiveLink one, before finding access tokens for it using the LiveLink Authority.

Not all user mapping connections need to access other systems in order to be useful. ManifoldCF, for instance, comes with a regular expression user mapper that manipulates a user name string using regular expressions alone. Also, user mapping is not needed for many, if not most, authorities. You will not need any user mapping connections if the authorities that you intend to create can all operate using the same user name, and that user name is in the form that will be made available to ManifoldCF's authority servlet at search time.
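To show what a regular-expression mapping does, here is a minimal sketch using Python's `re` module. It is not the ManifoldCF regular expression mapper and does not use its configuration syntax. The function name, the example pattern, and the choice to return unmatched names unchanged are all assumptions made for illustration.

```python
import re


def map_user_name(user_name, pattern, replacement):
    """Rewrite user_name if the whole name matches pattern; otherwise return it as-is."""
    match = re.fullmatch(pattern, user_name)
    if match is None:
        return user_name  # assumption: names that do not match pass through unchanged
    # Fill group references such as \1 in the replacement template.
    return match.expand(replacement)


# Strip the domain part from a name of the form "user@domain".
print(map_user_name("jsmith@example.com", r"([^@]+)@.*", r"\1"))
```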

You should define your mapping connections before setting up your authority connections. An authority connection may specify a mapping connection that precedes it. For the same reason, it's also convenient to define your mapping connections in the order that you want to process the user name. If you don't manage to do this right the first time, though, there is no reason you cannot go back and fix things up.

You can create a mapping connection by clicking the "List User Mapping Connections" link in the left-side navigation menu. When you do this, the following screen will appear:

On a freshly created system, there may well be no existing mapping connections listed. If there are already mapping connections, they will be listed on this screen, along with links that allow you to view, edit, or delete them. To create a new mapping connection, click the "Add a new connection" link at the bottom. The following screen will then appear:

The tabs across the top each present a different view of your mapping connection. Each tab allows you to edit a different characteristic of that connection. The exact set of tabs you see depends on the connection type you choose for the connection.

Start by giving your connection a name and a description. Remember that all mapping connection names must be unique, and cannot be changed after the connection is defined. The name must be no more than 32 characters long. The description can be up to 255 characters long. When you are done, click on the "Type" tab. The Type tab for the connection will then appear:

The list of mapping connection types in the pulldown box, and what they are each called, is determined by your system integrator. The configuration tabs for each different kind of mapping connection type included with ManifoldCF are described in separate sections below.

After you choose a mapping connection type, click the "Continue" button at the bottom of the pane. You will then see all the tabs appropriate for that kind of connection appear, and a "Save" button will also appear at the bottom of the pane. You must click the "Save" button when you are done in order to create your connection. If you click "Cancel" instead, the new connection will not be created. (The same thing will happen if you click on any of the navigation links in the left-hand pane.)

Every mapping connection has a "Prerequisites" tab. This tab allows you to specify which mapping connection needs to be run before this one (if any). The tab looks like this:

Note: It is very important that you do not specify prerequisites in such a way as to create a loop. To make this easier, ManifoldCF will not display any user mapping connections in the pulldown which, if selected, would lead to a loop.
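The filtering of the pulldown described above can be pictured roughly as follows. This is a sketch under assumptions, not ManifoldCF's implementation: the function name is hypothetical, and each connection is assumed to have at most one prerequisite, stored in a plain dictionary.

```python
def selectable_prerequisites(current, prerequisite_of):
    """List the connections that may precede `current` without creating a loop.

    prerequisite_of maps each connection name to its prerequisite name,
    or to None when it has no prerequisite.
    """
    allowed = []
    for candidate in prerequisite_of:
        if candidate == current:
            continue
        # Follow the candidate's chain of prerequisites. If the chain
        # reaches `current`, selecting the candidate would close a loop.
        node, seen, creates_loop = candidate, set(), False
        while node is not None and node not in seen:
            if node == current:
                creates_loop = True
                break
            seen.add(node)
            node = prerequisite_of.get(node)
        if not creates_loop:
            allowed.append(candidate)
    return allowed

# Hypothetical chain: C runs after B, and B runs after A.
chain = {"A": None, "B": "A", "C": "B"}
print(selectable_prerequisites("A", chain))
print(selectable_prerequisites("C", chain))
```

In this example nothing can be offered as a prerequisite of "A", because both "B" and "C" already depend on it.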

Every mapping connection has a "Throttling" tab. The tab looks like this:

On this tab, you can specify only one thing: how many open connections are allowed at any given time to the system the mapping connection talks with. This restriction helps prevent that system from being overloaded, or in some cases exceeding its license limitations. Conversely, making this number larger allows for smaller average search latency. The default value is 10, which may not be optimal for all types of mapping connections. Please refer to the section of the manual describing your mapping connection type for more precise recommendations.
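The effect of this limit can be pictured with a small sketch. The class below is hypothetical and only illustrates the idea of capping the number of simultaneously open connections; it does not reflect how ManifoldCF manages its connection pools internally.

```python
import threading

class ConnectionThrottle:
    """Allow at most `max_connections` connections to be open at once."""

    def __init__(self, max_connections=10):
        self._slots = threading.BoundedSemaphore(max_connections)

    def try_open(self):
        # Returns False immediately when every slot is already in use.
        return self._slots.acquire(blocking=False)

    def close(self):
        # Frees one slot so that another connection may be opened.
        self._slots.release()

throttle = ConnectionThrottle(max_connections=2)
print(throttle.try_open(), throttle.try_open(), throttle.try_open())
```

With a limit of two, the third attempt is refused until one of the open connections is closed. A request that has to wait for a free slot is what adds to search latency when the limit is set low.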

Please refer to the section of the manual describing your chosen mapping connection type for a description of the tabs appropriate for that connection type.

After you save your connection, a summary screen will be displayed that describes your connection's configuration. This looks something like this (although the details will differ somewhat based on what connection type you chose):

The summary screen contains a line where the connection's status is displayed. If you did everything correctly, the message "Connection working" will be displayed as a status. If there was a problem, you will see a connection-type-specific diagnostic message instead. If this happens, you will need to correct the problem, by either fixing your infrastructure, or by editing the connection configuration appropriately, before the mapping connection will work correctly.

Also note that there are three buttons along the bottom of the display: "Refresh", "Edit", and "Delete". We'll go into the purpose for each of these buttons in turn.

The "Refresh" button simply reloads the view page for the mapping connection, and updates the connection status. Use this button when you have made changes to the external system your mapping connection is connected to that might affect whether the connection will succeed or not.

The "Edit" button allows you to go back and edit the connection parameters. Use this button if you want to change the connection's characteristics or specifications in any way.

The "Delete" button allows you to delete the connection. Use this button if you no longer want the connection to remain in the available list of mapping connections. Note that ManifoldCF will not allow you to delete a connection that is being referenced by another mapping connection or an authority connection.

Defining Authority Connections

The Framework UI's left-side menu contains a link for listing authority connections. An authority connection is a connection to a system that defines a particular security environment. For example, if you want to index some documents that are protected by Active Directory, you would need to configure an Active Directory authority connection.

Bear in mind that only specific authority connection types are compatible with a given repository connection type. Read the details of your desired repository type in this document in order to understand how it is designed to be used. You may not need an authority if you do not mind that portions of all the documents you want to index are visible to everyone. For web, RSS, and Wiki crawling, this might be the situation. Most other repositories have some native security mechanism, however.

You can create an authority connection by clicking the "List Authority Connections" link in the left-side navigation menu. When you do this, the following screen will appear:

On a freshly created system, there may well be no existing authority connections listed. If there are already authority connections, they will be listed on this screen, along with links that allow you to view, edit, or delete them. To create a new authority connection, click the "Add a new connection" link at the bottom. The following screen will then appear:

The tabs across the top each present a different view of your authority connection. Each tab allows you to edit a different characteristic of that connection. The exact set of tabs you see depends on the connection type you choose for the connection.

Start by giving your connection a name and a description. Remember that all authority connection names must be unique, and cannot be changed after the connection is defined. The name must be no more than 32 characters long. The description can be up to 255 characters long. When you are done, click on the "Type" tab. The Type tab for the connection will then appear:

The list of authority connection types in the pulldown box, and what they are each called, is determined by your system integrator. The configuration tabs for each different kind of authority connection type are described in this document in separate sections below.

On this tab, you must also select the authority group that the authority connection you are creating belongs to. Select the appropriate authority group from the pulldown.

You also have the option of selecting a non-default authorization domain. An authorization domain describes which of possibly several user identities the authority connection is associated with. For example, a single user may have an Active Directory identity, a LiveLink identity, and a Facebook identity. Your authority connection will be appropriate to only one of those identities. The list of specific authorization domains available is determined by your system integrator.

After you choose an authority connection type, the authority group, and optionally the authorization domain, click the "Continue" button at the bottom of the pane. You will then see all the tabs appropriate for that kind of connection appear, and a "Save" button will also appear at the bottom of the pane. You must click the "Save" button when you are done in order to create your connection. If you click "Cancel" instead, the new connection will not be created. (The same thing will happen if you click on any of the navigation links in the left-hand pane.)

Every authority connection has a "Prerequisites" tab. This tab allows you to specify which mapping connection needs to be run before this one (if any). The tab looks like this:

Every authority connection also has a "Throttling" tab. The tab looks like this:

On this tab, you can specify only one thing: how many open connections are allowed at any given time to the system the authority connection talks with. This restriction helps prevent that system from being overloaded, or in some cases exceeding its license limitations. Conversely, making this number larger allows for smaller average search latency. The default value is 10, which may not be optimal for all types of authority connections. Please refer to the section of the manual describing your authority connection type for more precise recommendations.

Please refer to the section of the manual describing your chosen authority connection type for a description of the tabs appropriate for that connection type.

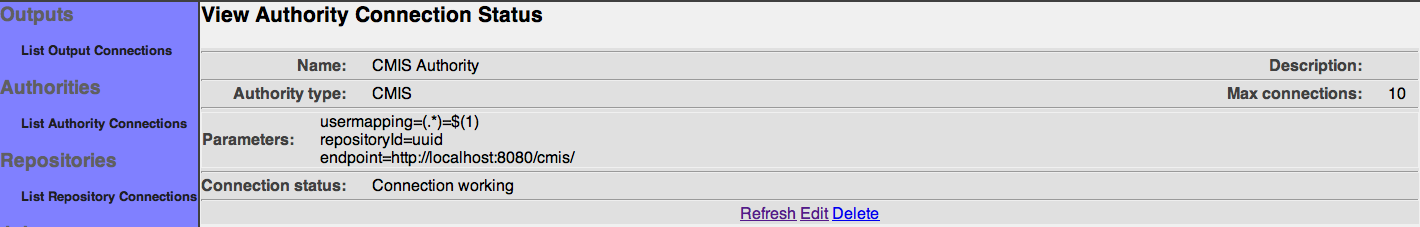

After you save your connection, a summary screen will be displayed that describes your connection's configuration. This looks something like this (although the details will differ somewhat based on what connection type you chose):

The summary screen contains a line where the connection's status is displayed. If you did everything correctly, the message "Connection working" will be displayed as a status. If there was a problem, you will see a connection-type-specific diagnostic message instead. If this happens, you will need to correct the problem, by either fixing your infrastructure, or by editing the connection configuration appropriately, before the authority connection will work correctly.

Also note that there are three buttons along the bottom of the display: "Refresh", "Edit", and "Delete". We'll go into the purpose for each of these buttons in turn.

The "Refresh" button simply reloads the view page for the authority connection, and updates the connection status. Use this button when you have made changes to the external system your authority connection is connected to that might affect whether the connection will succeed or not.

The "Edit" button allows you to go back and edit the connection parameters. Use this button if you want to change the connection's characteristics or specifications in any way.

The "Delete" button allows you to delete the connection. Use this button if you no longer want the connection to remain in the available list of authority connections.

Creating Jobs

A "job" in ManifoldCF is a description of a set of documents. The Framework's job is to fetch this set of documents from a specific repository connection, transform them using zero or more transformation connections, and send them to a specific output connection. The repository connection that is associated with the job will determine exactly how this set of documents is described, and to some degree how they are indexed. The output connection associated with the job can also affect how each document is indexed, as will any transformation connections that are specified.

Every job is expected to be run more than once. Each time a job is run, it is responsible not only for sending new or changed documents to the output connection, but also for notifying the output connection of any documents that are no longer part of the set. Note that there are two ways for a document to no longer be part of the included set of documents: Either the document may have been deleted from the repository, or the document may no longer be included in the allowed set of documents. The Framework handles each case properly.

Deleting a job causes the output connection to be notified of deletion for all documents belonging to that job. This makes sense because the job represents the set of documents, which would otherwise be orphaned when the job was removed. (Some users make the assumption that a ManifoldCF job represents nothing more than a task, which is an incorrect assumption.)

Note that the Framework allows jobs that describe overlapping sets of documents to be defined. Documents that exist in more than one job are treated in the following special ways:

- When a job is deleted, the output connections are notified of deletion of documents belonging to that job only if they don't belong to another job

- The version of the document sent to an output connection depends on which job was run last

The subtle logic of overlapping documents means that you probably want to avoid this situation entirely, if it is at all feasible.
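The deletion rule for overlapping jobs can be sketched as a simple set computation. The function and the job and document names are hypothetical, and the sketch models each job as nothing more than a set of document identifiers.

```python
def documents_to_delete(job_documents, job_to_delete):
    """Return the documents whose deletion is reported when a job is removed.

    job_documents maps a job name to the set of document identifiers
    that belong to that job.
    """
    kept_elsewhere = set()
    for job, documents in job_documents.items():
        if job != job_to_delete:
            kept_elsewhere |= documents
    # Only documents that no other job still describes are deleted.
    return job_documents[job_to_delete] - kept_elsewhere

jobs = {"invoices": {"doc1", "doc2"}, "archive": {"doc2", "doc3"}}
print(documents_to_delete(jobs, "invoices"))
```

Here "doc2" survives the deletion of the "invoices" job because the "archive" job still includes it.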

A typical non-continuous run of a job has the following stages of execution:

- Adding the job's new, changed, or deleted starting points to the queue ("seeding")

- Fetching documents, discovering new documents, and detecting deletions

- Removing no-longer-included documents from the queue

Jobs can also be run "continuously", which means that the job never completes, unless it is aborted. A continuous run has different stages of execution:

- Adding the job's new, changed, or deleted starting points to the queue ("seeding")

- Fetching documents, discovering new documents, and detecting deletions, while reseeding periodically

Note that continuous jobs cannot remove no-longer-included documents from the queue. They can only remove documents that have been deleted from the repository.

A job can independently be configured to start when explicitly started by a user, or to run on a user-specified schedule. If a job is set up to run on a schedule, it can be made to start only at the beginning of a schedule window, or to start again within any remaining schedule window when the previous job run completes.

There is no restriction in ManifoldCF as to how many jobs may be running at any given time.

You create a job by first clicking on the "List All Jobs" link on the left-side menu. The following screen will appear:

You may view, edit, or delete any existing jobs by clicking on the appropriate link. You may also create a new job that is a copy of an existing job. But to create a brand-new job, click the "Add a new job" link at the bottom. You will then see the following page:

Give your job a name. Note that job names do not have to be unique, although it is probably less confusing to have a different name for each one. Then, click the "Connection" tab:

Now, you should select the repository connection name. Bear in mind that whatever you select cannot be changed after the job is saved the first time.

Add an output, or more than one, by selecting the output in the pulldown, selecting the prerequisite pipeline stage, and clicking the "Add output" button. Note that once the job is saved the first time, you cannot delete an output. But you can rearrange your document processing pipeline in most other ways whenever you want to, including adding or removing transformation connections.

If you do not have any transformation connections defined, you will not be given the option of inserting a transformation connection into the pipeline. But if you have transformation connections defined, and you want to include them in the document pipeline, you can select them from the transformation connection pulldown, type a description into the description box, and then click one of the "Insert before" buttons to insert it into the document pipeline.

If you do not have any notification connections defined, you will not be given the option of adding one or more notifications to the end of the job. But if you have notification connections defined, and you want to include them, you can select them from the notification connection pulldown, type a description into the description box, and then click the appropriate "Add" button to add it into the notification list.

You also have the opportunity to modify the job's priority and start method at this time. The priority controls how important this job's documents are, relative to documents from any other job. The higher the number, the more important it is considered for that job's documents to be fetched first. The start method is as previously described; you get a choice of manual start, starting on the beginning of a scheduling window, or starting whenever possible within a scheduling window.

Make your selections, and click "Continue". The rest of the job's tabs will now appear, and a "Save" button will also appear at the bottom of the pane. You must click the "Save" button when you are done in order to create or update your job. If you click "Cancel" instead, the new job will not be created. (The same thing will happen if you click on any of the navigation links in the left-hand pane.)

All jobs have a "Scheduling" tab. The scheduling tab allows you to set up schedule-related configuration information:

On this tab, you can specify the following parameters:

- Whether the job runs continuously, or scans every document once

- How long a document should remain alive before it is 'expired', and removed from the index

- The minimum interval before a document is re-checked, to see if it has changed

- The maximum interval before a document is re-checked, to see if it has changed

- How long to wait before reseeding initial documents

The last four parameters only make sense if a job is a continuously running one, as the UI indicates.

The other thing you can do on this tab is to define an appropriate set of scheduling records. Each scheduling record defines some related set of intervals during which the job can run. The intervals are determined by the starting time (which is defined by the day of week, month, day, hour, and minute pulldowns), and the maximum run time in minutes, which determines when the interval ends. It is, of course, possible to select multiple values for each of the pulldowns, in which case you will be describing a starting time that has to match at least one of the selected values for each of the specified fields.

Once you have selected the schedule values you want, click the "Add Scheduled Time" button:

The example shows a schedule where crawls are run on Saturday and Sunday nights at 2 AM, and run for no more than 4 hours.
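To make the notion of a schedule window concrete, the sketch below checks whether a given moment falls inside a window such as the one in the example (Saturday and Sunday at 2 AM, for at most 240 minutes). The function is hypothetical and simplified: it handles only the day of week, hour, and minute fields, assumes windows shorter than 24 hours, and uses Python's convention that Monday is 0 and Sunday is 6.

```python
from datetime import datetime, timedelta

def in_schedule_window(now, weekdays, hour, minute, max_run_minutes):
    """Return True if `now` lies inside a window that starts at hour:minute
    on one of `weekdays` and lasts `max_run_minutes` minutes."""
    # A window may cross midnight, so also check the one that started yesterday.
    for days_back in (0, 1):
        day = now - timedelta(days=days_back)
        start = day.replace(hour=hour, minute=minute, second=0, microsecond=0)
        end = start + timedelta(minutes=max_run_minutes)
        if start.weekday() in weekdays and start <= now < end:
            return True
    return False

weekend = {5, 6}  # Saturday and Sunday
# 6 January 2024 was a Saturday.
print(in_schedule_window(datetime(2024, 1, 6, 3, 30), weekend, 2, 0, 240))
```

A job set to start only at the beginning of a window would begin at 2 AM, while one set to start whenever possible within a window could begin at any moment for which a check like this succeeds.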

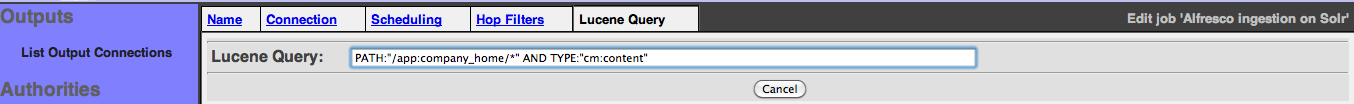

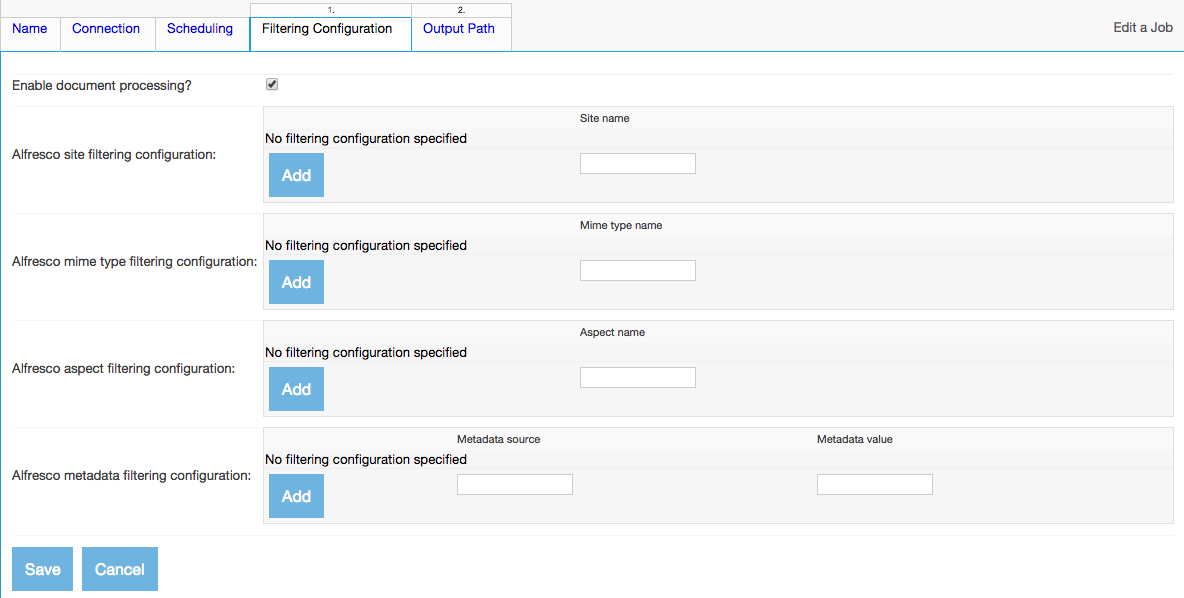

The rest of the job tabs depend on the types of the connections you selected. Please refer to the section of the manual describing the appropriate connection types corresponding to your chosen repository and output connections for a description of the job tabs that will appear for those connections.

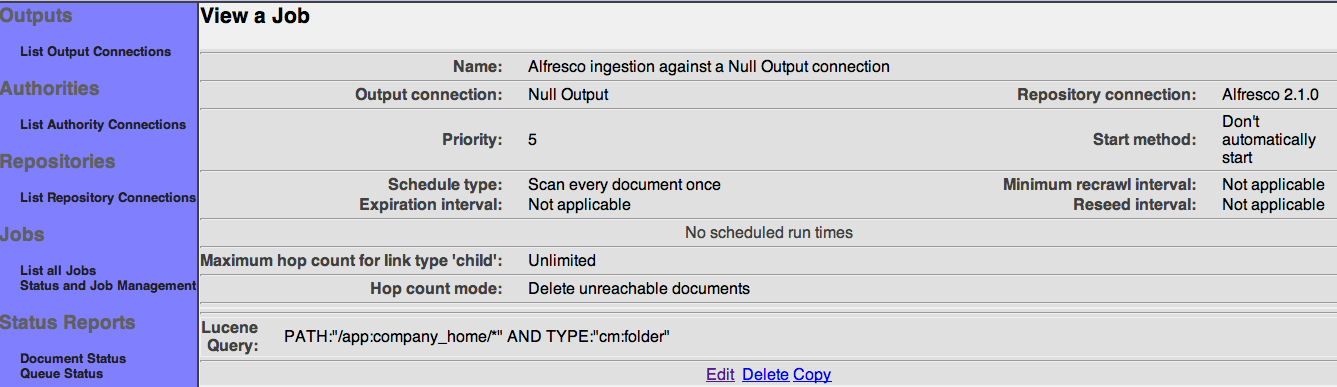

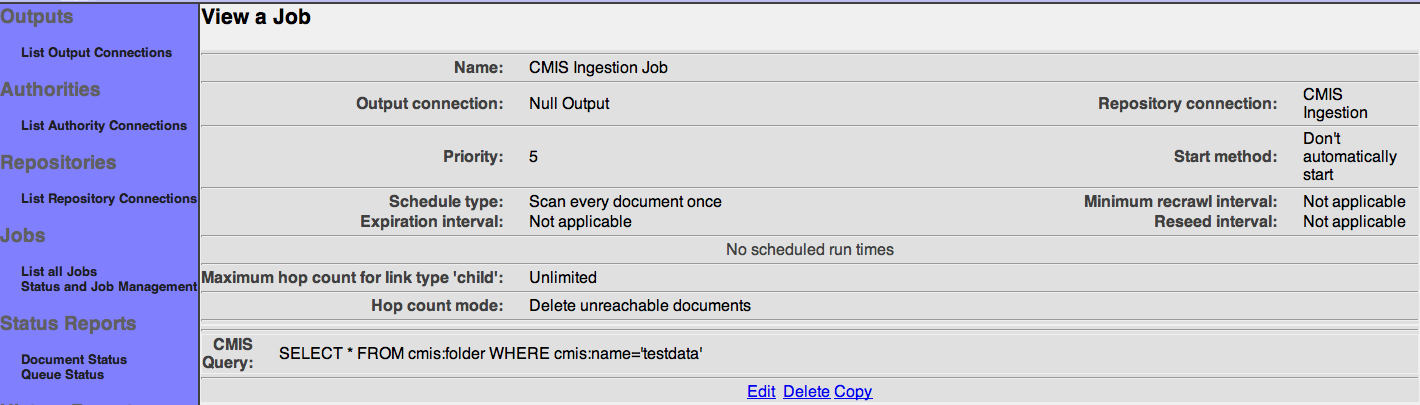

After you save your job, a summary screen will be displayed that describes your job's specification. This looks something like this (although the details will differ somewhat based on what connections you chose):

Also note that there are four buttons along the bottom of the display: "Edit", "Delete", "Copy", and "Reset seeding". We'll go into the purpose for each of these buttons in turn.

The "Edit" button allows you to go back and edit the job specification. Use this button if you want to change the job's details in any way.

The "Delete" button allows you to delete the job. Use this button if you no longer want the job to exist. Note that when you delete a job in ManifoldCF, all documents that were indexed using that job are removed from the index.

The "Copy" button allows you to edit a copy of the current job. Use this button if you want to create a new job that is based largely on the current job's specification. This can be helpful if you have many similar jobs to create.

The "Reset seeding" button will cause ManifoldCF to forget the seeding history of the job. Seeding is the process of discovering documents that have been added or modified. Clicking this button ensures that ManifoldCF will examine all documents in the repository on the next crawl. This is not something that is done frequently; ManifoldCF is pretty good at managing this information itself, and will automatically do the same thing whenever a job specification is changed. Use this option if you've updated your connector software in a way that requires all documents to be re-examined.

Executing Jobs

You can follow what is going on, and control the execution of your jobs, by clicking on the "Status and Job Management" link on the left-side navigation menu. When you do, you might see something like this:

From here, you can click the "Refresh" link at the bottom of the main pane to see an updated status display, or you can directly control the job using the links in the leftmost status column. Allowed actions you may see at one point or another include:

- Start (start the job)

- Start minimal (start the job, but do only the minimal work possible)

- Abort (abort the job)

- Pause (pause the job)

- Resume (resume the job)

- Restart (equivalent to aborting the job, and starting it all over again)

- Restart minimal (equivalent to aborting the job, and starting it all over again, doing only the minimal work possible)

The columns "Documents", "Active", and "Processed" have very specific meanings as far as documents in the job's queue are concerned. The "Documents" column counts all the documents that belong to the job. The "Active" column counts all of the documents for that job that are queued up for processing. The "Processed" column counts all documents that are on the queue for the job that have been processed at least once in the past.

Using the "minimal" variant of the listed actions will perform the minimum possible amount of work, given the model that the connection type for the job uses. In some cases, this will mean that additions and modifications are indexed, but deletions are not detected. A complete job run is usually necessary to fully synchronize the target index with the repository contents.

Status Reports

Every job in ManifoldCF describes a set of documents. A reference to each document in the set is kept in a job-specific queue. It is sometimes valuable for diagnostic reasons to examine this queue for information. The Framework UI has several canned reports which do just that.

Each status report allows you to select what documents you are interested in from a job's queue based on the following information:

- The job

- The document identifier

- The document's status and state

- When the document is scheduled to be processed next

Document Status

A document status report simply lists all matching documents from within the queue, along with their state, status, and planned future activity. You might use this report if you were trying to figure out (for example) whether a specific document had been processed yet during a job run.

Click on the "Document Status" link on the left-hand menu. You will see a screen that looks something like this:

Select the desired connection. You may also select the desired document state and status, as well as specify a regular expression for the document identifier, if you want. Then, click the "Continue" button:

Select the job whose documents you want to see, and click "Continue" once again. The results will display:

You may alter the criteria, and click "Go" again, if you so choose. Or, you can alter the number of result rows displayed at a time, and click "Go" to redisplay. Finally, you can page up and down through the results using the "Prev" and "Next" links.

Queue Status

A queue status report is an aggregate report that counts the number of occurrences of documents in specified classes. The classes are specified as a grouping within a regular expression, which is matched against all specified document identifiers. The results that are displayed are counts of documents. There will be a column for each combination of document state and status.

For example, a class specification of "()" will produce exactly one result row, and will provide a count of documents that are in each state/status combination. A class description of "(.*)", on the other hand, will create one row for each document identifier, and will put a "1" in the column representing state and status of that document, with a "0" in all other column positions.
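The grouping behavior can be tried out with a short sketch. It uses Python's `re` module as a stand-in for whatever regular expression engine backs the report, and the function name, the sample identifiers, and the state labels ("Processed", "Pending") are hypothetical. For brevity, state and status are collapsed into a single label.

```python
import re
from collections import Counter, defaultdict

def queue_status(documents, class_pattern):
    """Count documents per class and per state.

    documents is an iterable of (identifier, state) pairs. The class of a
    document is whatever the first group of class_pattern captures.
    """
    compiled = re.compile(class_pattern)
    rows = defaultdict(Counter)
    for identifier, state in documents:
        match = compiled.search(identifier)
        if match is None:
            continue  # identifiers that do not match are not counted
        rows[match.group(1)][state] += 1
    return {name: dict(counts) for name, counts in rows.items()}

docs = [
    ("http://a/1", "Processed"),
    ("http://a/2", "Processed"),
    ("http://b/1", "Pending"),
]
print(queue_status(docs, "()"))                   # a single row for everything
print(queue_status(docs, r"^https?://([^/]+)/"))  # one row per host name
```

Grouping on part of the identifier, such as the host name in the last call, is usually more informative than either of the two extremes.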

Click the "Queue Status" link on the left-hand menu. You will see a screen that looks like this:

Select the desired connection. You may also select the desired document state and status, as well as specify a regular expression for the document identifier, if you want. You will probably want to change the document identifier class from its default value of "(.*)". Then, click the "Continue" button:

Select the job whose documents you want to see, and click "Continue" once again. The results will display:

You may alter the criteria, and click "Go" again, if you so choose. Or, you can alter the number of result rows displayed at a time, and click "Go" to redisplay. Finally, you can page up and down through the results using the "Prev" and "Next" links.

History Reports

For every repository connection, ManifoldCF keeps a history of what has taken place involving that connection. This history includes both events that the framework itself logs, as well as events that a repository connection or output connection will log. These individual events are categorized by "activity type". Some of the kinds of activity types that exist are:

- Job start

- Job end

- Job abort

- Various connection-type-specific read or access operations

- Various connection-type-specific output or indexing operations

This history can be enormously helpful in understanding how your system is behaving, and whether or not it is working properly. For this reason, the Framework UI has the ability to generate several canned reports which query this history data and display the results.

All history reports allow you to specify what history records you are interested in including. These records are selected using the following criteria:

- The repository connection name

- The activity type(s) desired

- The start time desired

- The end time desired

- The identifier(s) involved, specified as a regular expression

- The result(s) produced, specified as a regular expression
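As a rough picture of how these criteria combine, the sketch below filters an in-memory list of history records. Everything in it is an assumption made for illustration: the record fields, the use of plain integers for times, and the function name. The records are taken to belong to a single repository connection already, and Python's `re` module stands in for the report's regular expression matching.

```python
import re
from typing import NamedTuple

class HistoryRecord(NamedTuple):
    activity: str
    start_time: int
    end_time: int
    identifier: str
    result_code: str

def select_history(records, activities, start, end,
                   identifier_regex="", result_regex=""):
    """Return the matching records, most recent first."""
    id_re = re.compile(identifier_regex)
    result_re = re.compile(result_regex)
    chosen = [
        r for r in records
        if r.activity in activities
        and start <= r.start_time < end
        and id_re.search(r.identifier)
        and result_re.search(r.result_code)
    ]
    return sorted(chosen, key=lambda r: r.start_time, reverse=True)

records = [
    HistoryRecord("fetch", 100, 110, "http://a/1", "200"),
    HistoryRecord("fetch", 200, 210, "http://a/2", "404"),
    HistoryRecord("job start", 50, 50, "", ""),
]
for record in select_history(records, {"fetch"}, 0, 1000, result_regex="^4"):
    print(record.identifier, record.result_code)
```

An empty regular expression matches every value, which mirrors a report whose identifier and result criteria have been left at "match everything".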

The actual reports available are designed to be useful for diagnosing both access issues, and performance issues. See below for a summary of the types available.

Simple History Reports

As the name suggests, a simple history report does not attempt to aggregate any data, but instead just lists matching records from the repository connection's history. These records are initially presented in most-recent-first order, and include columns for the start and end time of the event, the kind of activity represented by the event, the identifier involved, the number of bytes involved, and the results of the event. Once displayed, you may choose to display more or less data, or reorder the display by column, or page through the data.

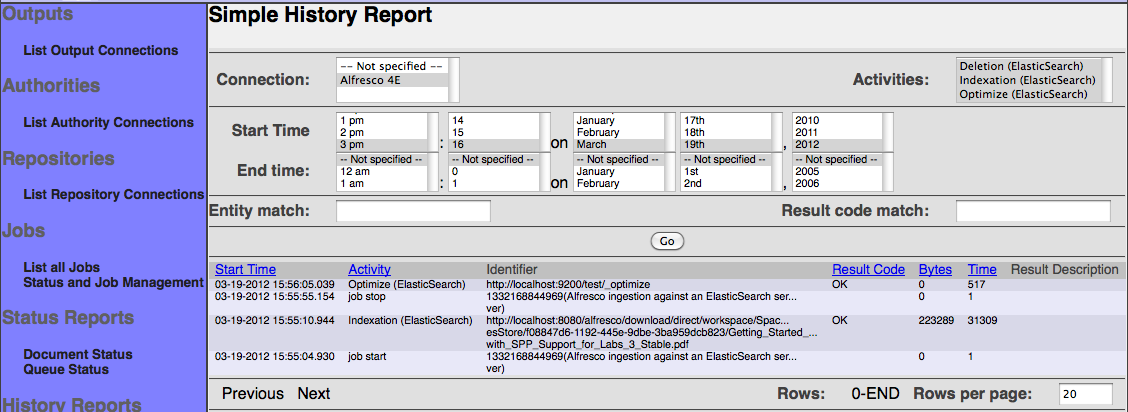

To get started, click on the "Simple History" link on the left-hand menu. You will see a screen that looks like this:

Now, select the desired repository connection from the pulldown in the upper left hand corner. If you like, you can also change the specified date/time range, or specify an identifier regular expression or result code regular expression. By default, the date/time range selects all events within the last hour, while the identifier regular expression and result code regular expression match all identifiers and result codes.

Next, click the "Continue" button. A list of pertinent activities should then appear in a pulldown in the upper right:

You may select one or more activities that you would like a report on. When you are done, click the "Go" button. The results will appear, ordered by time, most recent event first:

You may alter the criteria, and click "Go" again, if you so choose. Or, you can alter the number of result rows displayed at a time, and click "Go" to redisplay. Finally, you can page up and down through the results using the "Prev" and "Next" links.

Please bear in mind that the report redisplays whatever matches each time you click "Go". So, if your time interval goes from an hour beforehand to "now", and you have activity happening, you will see different results each time "Go" is clicked.

Maximum Activity Reports

A maximum activity report is an aggregate report used primarily to display the maximum rate that events occur within a specified time interval. MHL

Maximum Bandwidth Reports

A maximum bandwidth report is an aggregate report used primarily to display the maximum byte rate that pertains to events occurring within a specified time interval. MHL

Result Histogram Reports

A result histogram report is an aggregate report used to count the occurrences of each kind of matching result for all matching events. MHL
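The counting idea behind a result histogram can be sketched very briefly. The function name and the sample events are hypothetical, and the sketch counts raw result codes only, without any of the selection criteria the UI offers.

```python
from collections import Counter

def result_histogram(events):
    """Count how often each result code occurs, most frequent first.

    events is an iterable of (identifier, result_code) pairs.
    """
    return Counter(code for _, code in events).most_common()

events = [("http://a/1", "OK"), ("http://a/2", "OK"), ("http://a/3", "404")]
print(result_histogram(events))
```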

A Note About Credentials

If any of your selected connection types require credentials, you may find it necessary to approach your system administrator to obtain an appropriate set. System administrators are often reluctant to provide accounts and credentials that have any more power than is utterly necessary, and sometimes not even that. Great care has been taken in the development of all connection types to be sure they require no more privilege than is utterly necessary. If a security-related warning appears when you view a connection's status, you must inform the system administrator that the credentials are inadequate to allow the connection to accomplish its task, and work with him/her to correct the problem.

Output Connection Types

Amazon Cloud Search Output Connection

The Amazon Cloud Search output connection type sends documents to a specific path within a specified Amazon Cloud Search instance. The connection type furthermore "batches" documents to reduce cost as much as is reasonable. As a result, some specified documents may be sent at the end of a job run, rather than at the time they would typically be indexed.

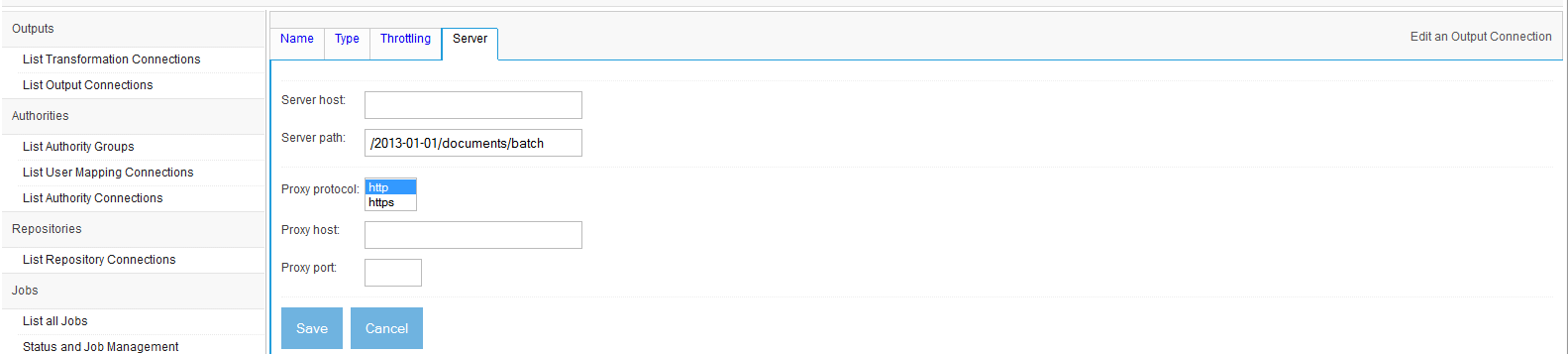

The connection configuration information for the Amazon Cloud Search Output Connection type includes one additional tab: the "Server" tab. This tab looks like this:

You must supply the "Server host" field in order for the connection to work.

The Amazon Cloud Search Output Connection type does not contribute any tabs to a job definition.

The Amazon Cloud Search Output Connection type can only accept text content that is encoded in a UTF-8-compatible manner. It is highly recommended to use the Tika Content Extractor in the pipeline prior to the Amazon Cloud Search Output Connection type in order to convert documents to an indexable form.

In order to successfully index ManifoldCF documents in Amazon Cloud Search, you will need to describe a Cloud Search schema for receiving them. The fields that the Amazon Cloud Search output connection type sends are those that it gets specifically from the document as it comes through the ManifoldCF pipeline, with the addition of two hard-wired fields: "f_bodytext", containing the document body content, and "document_uri", containing the document's URI. You may also need to use the Metadata Adjuster transformation connection type to make sure that document metadata sent to Amazon Cloud Search agree with the schema you have defined there. Please refer to this document for details of how to set up an Amazon Cloud Search schema.

CMIS Output Connection

The CMIS Output Connection type allows you to migrate content to any CMIS-compliant repository.

By default, each CMIS Connection manages a single CMIS repository. This means that if you have multiple CMIS repositories exposed by a single endpoint, you need to create a separate connection for each CMIS repository.

CMIS repository documents are typically secured by using the CMIS Authority Connection type. This authority type, however, does not have access to user groups, since there is no such functionality in the CMIS specification at this time. As a result, most people only use the CMIS connection type in an unsecured manner.

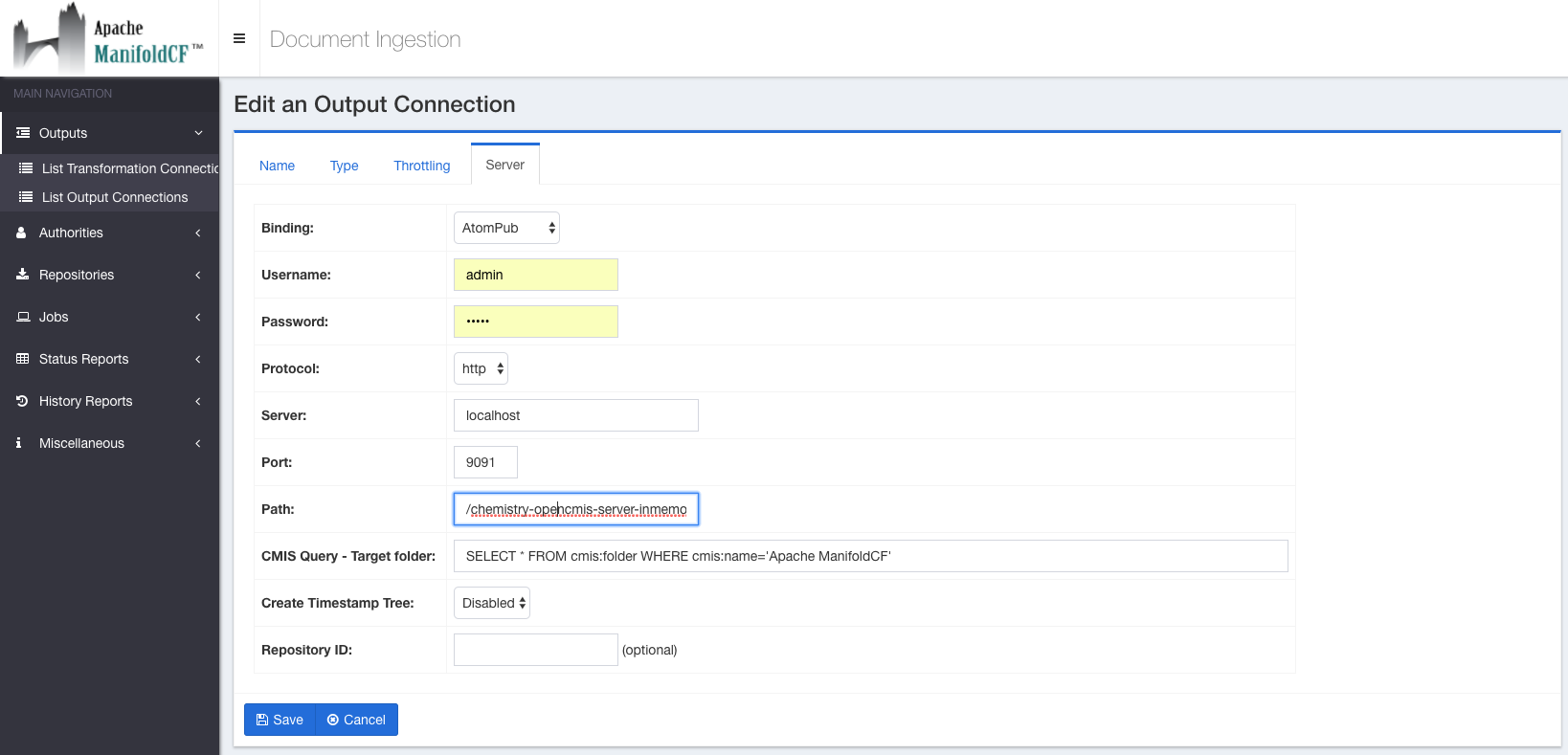

A CMIS Output connection has the following configuration parameters on the output connection editing screen:

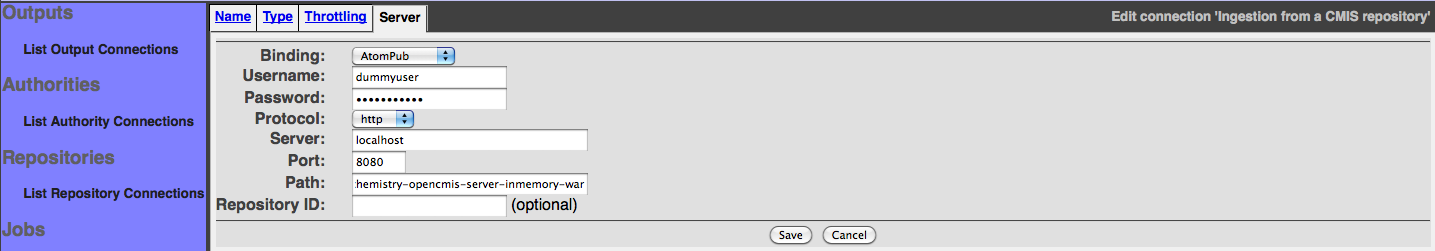

Select the correct CMIS binding protocol (AtomPub or Web Services) and enter the correct username, password and the endpoint to reference the CMIS document server services.

The endpoint consists of the HTTP protocol, hostname, port and the context path of the CMIS service exposed by the CMIS server:

http://HOSTNAME:PORT/CMIS_CONTEXT_PATH

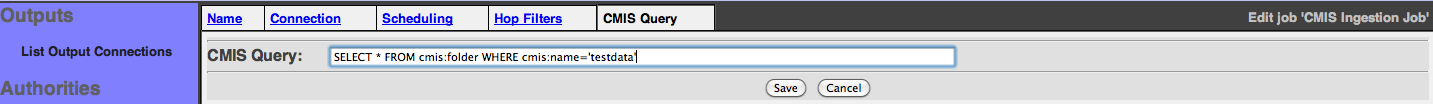

The CMIS Query must be provided to select the target folder that serves as your drop zone. The target folder must be an existing CMIS folder.

By default the crawler will replicate the same source path structure for each content in that target folder.

Suppose that your content is stored in the source repository under the following path:

/MySourceRepo/Invoices

Suppose also that you have configured your CMIS Output Connection with the default value of the CMIS Query for your target folder:

SELECT * FROM cmis:folder WHERE cmis:name='Apache ManifoldCF'

All migrated content will then be placed in the following target CMIS folder:

/path/to/your/Apache ManifoldCF/MySourceRepo/Invoices
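
The path mapping in this example can be sketched in a few lines of Python. This is only an illustration of the resulting folder layout, not the connector's actual code; the function name `target_folder_path` is hypothetical, and the drop zone path is the placeholder used in the example.

```python
import posixpath


def target_folder_path(drop_zone: str, source_path: str) -> str:
    """Append the source path to the drop zone, keeping the source structure."""
    # Strip the leading slash so the source path is appended to the drop
    # zone instead of being treated as a new absolute path.
    return posixpath.join(drop_zone, source_path.lstrip("/"))


# "/path/to/your/Apache ManifoldCF" stands in for wherever the folder
# selected by the CMIS Query lives in the target repository.
print(target_folder_path("/path/to/your/Apache ManifoldCF", "/MySourceRepo/Invoices"))
```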

Optionally, you can provide a repository ID to select one of the exposed CMIS repositories. If this parameter is left empty, the CMIS connector uses the first repository exposed by the CMIS server.

Note that in a CMIS system each binding protocol has its own context path, so the endpoints differ. For example, the endpoint of the AtomPub binding exposed by the current version of the InMemory Server provided by the OpenCMIS framework is the following:

http://localhost:8080/chemistry-opencmis-server-inmemory-war-0.5.0-SNAPSHOT/atom

The Web Services binding is exposed using a different endpoint:

http://localhost:8080/chemistry-opencmis-server-inmemory-war-0.5.0-SNAPSHOT/services/RepositoryService
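
As a quick check of the endpoint format, the following Python sketch assembles both example endpoints from their parts. The helper `cmis_endpoint` is hypothetical and exists only to show how protocol, hostname, port, and context path combine; the values are those of the InMemory Server example above.

```python
def cmis_endpoint(hostname: str, port: int, context_path: str, scheme: str = "http") -> str:
    """Assemble an endpoint of the form PROTOCOL://HOSTNAME:PORT/CMIS_CONTEXT_PATH."""
    return f"{scheme}://{hostname}:{port}/{context_path.strip('/')}"


# Web application name taken from the OpenCMIS InMemory Server example.
WAR = "chemistry-opencmis-server-inmemory-war-0.5.0-SNAPSHOT"

# Each binding protocol has its own context path under the same server.
atompub = cmis_endpoint("localhost", 8080, f"{WAR}/atom")
web_services = cmis_endpoint("localhost", 8080, f"{WAR}/services/RepositoryService")

print(atompub)
print(web_services)
```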

ElasticSearch Output Connection

The ElasticSearch Output Connection type allows ManifoldCF to submit documents to an ElasticSearch instance, via its JSON over HTTP API. The connector has been designed to be as easy to use as possible.

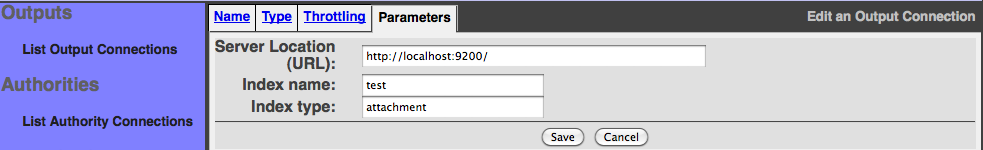

After creating an ElasticSearch output connection, you have to populate the parameters tab. Fill in the fields according to your ElasticSearch configuration. Each ElasticSearch output connector instance works with one index. To work with multiple indexes, create one output connector for each index.

The parameters are:

- Server location: A URL that references your ElasticSearch instance. The default value (http://localhost:9200) is valid if your ElasticSearch instance runs on the same server as the ManifoldCF instance.

- Index name: The connector will populate the index defined here.

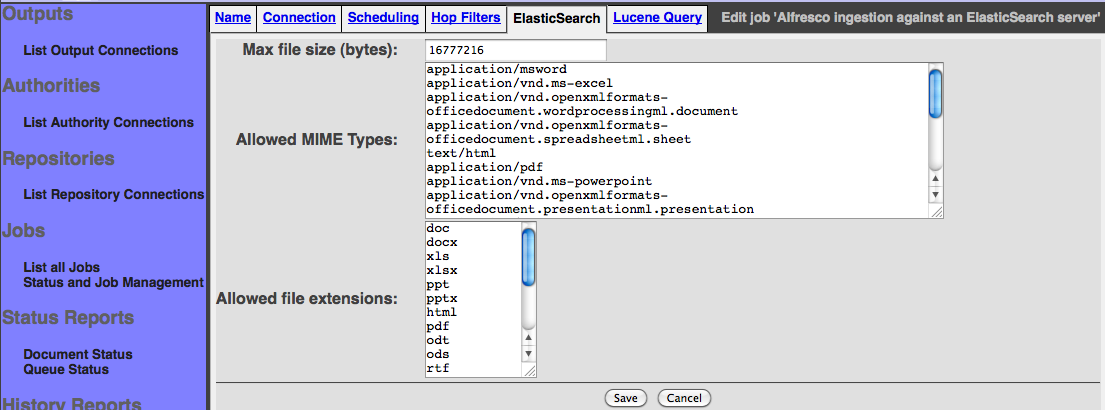

Once you have created a new job that uses the ElasticSearch output connector, the job will have an ElasticSearch tab. This tab lets you set:

- The maximum size of a document that will be indexed. The value is in bytes. The default value is 16MB.

- The allowed mime types. Warning: this does not work with all repository connectors.

- The allowed file extensions. Warning: this does not work with all repository connectors.

In the history report you will be able to monitor all the activities. The connector supports three activities: document ingestion (indexing), document deletion, and index optimization. The targeted index is automatically optimized when the job ends.

You may also refer to ElasticSearch's user documentation. Especially important is the need to configure the ElasticSearch index mapping before you try to index anything. If you have not configured the ElasticSearch mapping properly, then the documents you send to ElasticSearch via ManifoldCF will not be parsed, and once you send a document to the index, you cannot fix this in ElasticSearch without discarding your index. Specifically, you will want a mapping that enables the attachment plug-in, for example something like this:

    {
      "attachment" : {
        "properties" : {
          "file" : {
            "type" : "attachment",
            "fields" : {
              "title" : { "store" : "yes" },
              "keywords" : { "store" : "yes" },
              "author" : { "store" : "yes" },
              "content_type" : { "store" : "yes" },
              "name" : { "store" : "yes" },
              "date" : { "store" : "yes" },
              "file" : { "term_vector" : "with_positions_offsets", "store" : "yes" }
            }
          }
        }
      }
    }

Obviously, you would want your mapping to have details consistent with your particular indexing task. You can change the mapping or inspect it using the curl tool, which you can download from http://curl.haxx.se. For example, to inspect the mapping for a version of ElasticSearch running locally on port 9200:

curl -XGET http://localhost:9200/index/_mapping
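
If curl is not available, the same request can be made from Python's standard library. The sketch below mirrors the curl command above; the function names are hypothetical, and the server address and index name ("index") are simply the ones used in that command.

```python
import json
import urllib.request


def mapping_url(server: str, index: str) -> str:
    """Build the URL that the curl command above requests."""
    return f"{server.rstrip('/')}/{index}/_mapping"


def fetch_mapping(server: str = "http://localhost:9200", index: str = "index") -> dict:
    """Send the GET request and decode the JSON response body."""
    with urllib.request.urlopen(mapping_url(server, index)) as response:
        return json.load(response)


# The URL can be inspected without contacting the server.
print(mapping_url("http://localhost:9200", "index"))

# With a running ElasticSearch instance, the mapping could be printed with:
# print(json.dumps(fetch_mapping(), indent=2))
```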

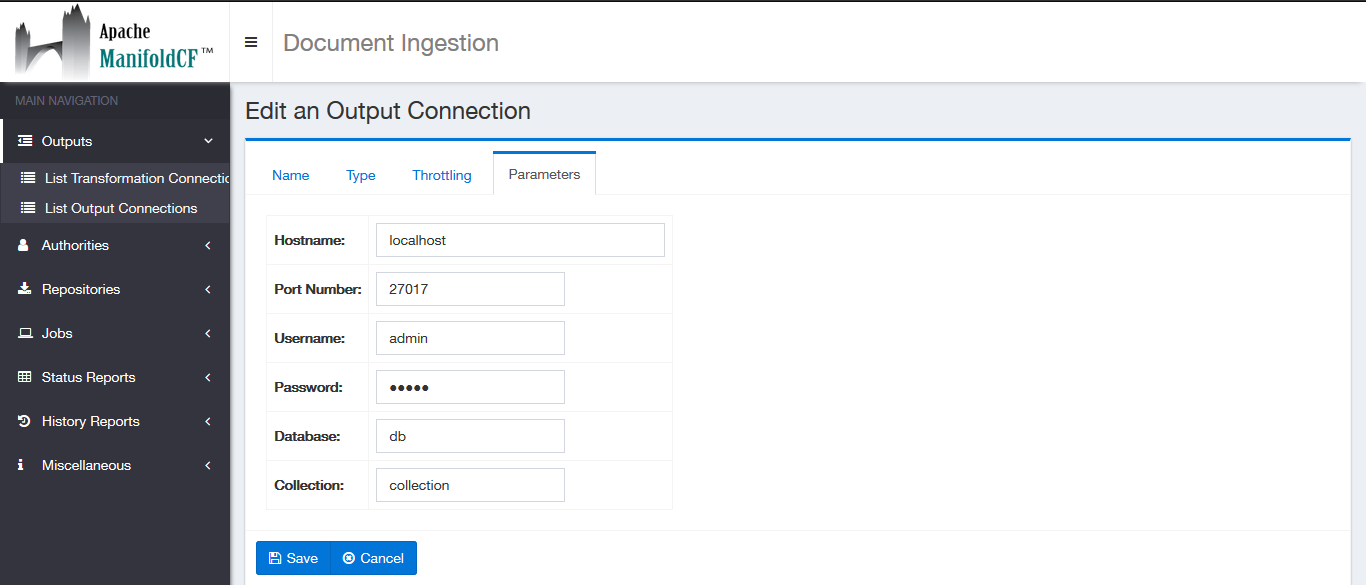

MongoDB Output Connection

The MongoDB Output Connection type allows you to store documents in a MongoDB instance.

By default, each MongoDB Output Connection manages a single MongoDB collection. To work with multiple MongoDB collections (even if they exist in the same MongoDB instance), you need to create a separate connection for each collection.

A MongoDB Output connection has the following configuration parameters on the output connection editing screen:

Specify the hostname and port number of the target MongoDB server (mongod), and enter the username and password to use as credentials.

Provide a Database name and a Collection name to uniquely specify where the documents are to be migrated.

Note that if the specified database or collection does not exist in the target MongoDB instance, it will be created automatically.
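
To verify the same parameters with another MongoDB client before saving the connection, it can help to combine them into a standard mongodb:// connection string. The sketch below is an illustration only: the helper name and all sample values are hypothetical, and it does not describe how the connector itself connects. The collection name is not part of the connection string and is chosen after connecting.

```python
from urllib.parse import quote_plus


def mongodb_uri(host: str, port: int, username: str, password: str, database: str) -> str:
    """Assemble a mongodb:// connection string from the connection parameters."""
    # Credentials are percent-encoded so that characters such as '@' or ':'
    # do not break the structure of the connection string.
    user = quote_plus(username)
    secret = quote_plus(password)
    return f"mongodb://{user}:{secret}@{host}:{port}/{database}"


# Sample values only; substitute your own hostname, port, and credentials.
print(mongodb_uri("localhost", 27017, "mcf_user", "p@ss:word", "manifoldcf"))
```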

File System Output Connection

The File System output connection type allows ManifoldCF to store documents in a local filesystem, using the conventions established by the Unix utility called wget. Documents stored by this connection type will not include any metadata or security information, but instead consist solely of a binary file.

The connection configuration information for the File System output connection type includes no additional tabs. There is an additional job tab, however, called "Output Path". The tab looks like this:

Fill in the path you want the connection type to use to write the documents to. Then, click the "Save" button.

HDFS Output Connection

The HDFS output connection type allows ManifoldCF to store documents in HDFS, using the conventions established by the Unix utility called wget. Documents stored by this connection type will not include any metadata or security information, but instead consist solely of a binary file.

The connection configuration information for the HDFS output connection type includes one additional tab: the "Server" tab. This tab looks like this:

Fill in the name node URI and the user name. Both are required.

For the HDFS output connection type, there is an additional job tab called "Output Path". The tab looks like this:

Fill in the path you want the connection type to use to write the documents to. Then, click the "Save" button.

MetaCarta GTS Output Connection

The MetaCarta GTS output connection type is designed to allow ManifoldCF to submit documents to an appropriate MetaCarta GTS search appliance, via the appliance's HTTP Ingestion API.

The connection type implicitly understands that GTS can only handle text, HTML, XML, RTF, PDF, and Microsoft Office documents. All other document types will be considered to be unindexable. This helps prevent jobs based on a GTS-type output connection from fetching data that is large, but of no particular relevance.

When you configure a job to use a GTS-type output connection, two additional tabs will be presented to the user: "Collections" and "Document Templates". These tabs allow per-job specification of these GTS-specific features.

More here later

Null Output Connection

The null output connection type is meant primarily to function as an aid for people writing repository connection types. It is not expected to be useful in practice.

The null output connection type simply logs indexing and deletion requests, and does nothing else. It does not have any special configuration tabs, nor does it contribute tabs to jobs defined that use it.

OpenSearchServer Output Connection

The OpenSearchServer Output Connection allows ManifoldCF to submit documents to an OpenSearchServer instance, via the XML over HTTP API. The connector has been designed to be as easy to use as possible.

After creating an OpenSearchServer output connection, you have to populate the parameters tab. Fill in the fields according to your OpenSearchServer configuration. Each OpenSearchServer output connector instance works with one index. To work with multiple indexes, create one output connector for each index.

The parameters are:

- Server location: A URL that references your OpenSearchServer instance. The default value (http://localhost:8080) is valid if your OpenSearchServer instance runs on the same server as the ManifoldCF instance.

- Index name: The connector will populate the index defined here.

- User name and API Key: The credentials required to connect to the OpenSearchServer instance. These can be left empty if no user has been created. The next figure shows where to find the user's information in the OpenSearchServer user interface.

Once you have created a new job that uses the OpenSearchServer output connector, the job will have an OpenSearchServer tab. This tab lets you set:

- The maximum size of a document that will be indexed. The value is in bytes. The default value is 16MB.

- The allowed mime types. Warning: this does not work with all repository connectors.

- The allowed file extensions. Warning: this does not work with all repository connectors.

In the history report you will be able to monitor all the activities. The connector supports three activities: document ingestion (indexing), document deletion, and index optimization. The targeted index is automatically optimized when the job ends.

You may also refer to the OpenSearchServer's user documentation.

Solr Output Connection

The Solr output connection type is designed to allow ManifoldCF to submit documents to either an appropriate Apache Solr instance, via the Solr HTTP API, or alternatively to a Solr Cloud cluster. The configuration parameters are initially set to appropriate default values for a stand-alone Solr instance.

When you create a Solr output connection, multiple configuration tabs appear. The first tab is the "Solr type" tab. Here you select whether you want your connection to communicate to a standalone Solr instance, or to a Solr Cloud cluster:

Select which kind of Solr installation you want to communicate with. Based on your selection, you can proceed to either the "Server" tab (if a standalone instance) or to the "ZooKeeper" tab (if a SolrCloud cluster).

The "Server" tab allows you to configure the HTTP parameters appropriate for communicating with a standalone Solr instance:

If your Solr setup is a standalone instance, fill in the fields according to your Solr configuration. The Solr connection type supports only basic authentication at this time; if you have this enabled, supply the credentials as requested on the bottom part of the form.

The "ZooKeeper" tab allows you to configure the connection type to communicate with a Solr Cloud cluster:

Here, add each ZooKeeper instance in the SolrCloud cluster to the list of ZooKeeper instances. The connection comes preconfigured with "localhost" as being a ZooKeeper instance. You may delete this if it is not the case.

The next tab is the "Schema" tab, which allows you to specify the names of various Solr fields into which the Solr connection type will place built-in document metadata:

The most important of these is the document identifier field, which MUST be present for the connection type to function. This field will be used to uniquely identify the document within Solr, and will contain the document's URL. The Solr connection type will treat this field as being a unique key for locating the indexed document for further modification or deletion. The other Solr fields are optional, and largely self-explanatory.

The next tab is the "Arguments" tab, which allows you to specify arbitrary arguments to be sent to Solr:

Fill in the argument name and value, and click the "Add" button. Bear in mind that if you add an argument with the same name as an existing one, it will replace the existing one with the new specified value. You can delete existing arguments by clicking the "Delete" button next to the argument you want to delete.

Use this tab to specify any and all desired Solr update request parameters. You can, for instance, add update.chain=myChain to select a specific document processing pipeline/chain to use for processing documents. See the Solr documentation for more valid arguments.
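
The replace-on-duplicate behaviour of the "Arguments" tab, and the way such arguments typically end up on an update request, can be sketched as follows. This is an illustration under stated assumptions, not the connector's code: the helper `add_argument`, the value "oldChain", and the base URL of a local Solr instance are all hypothetical.

```python
from urllib.parse import urlencode


def add_argument(arguments: dict, name: str, value: str) -> dict:
    """Add an argument; an existing argument with the same name is replaced."""
    updated = dict(arguments)
    updated[name] = value
    return updated


arguments = {}
arguments = add_argument(arguments, "update.chain", "oldChain")
# Adding the same name again replaces the earlier value.
arguments = add_argument(arguments, "update.chain", "myChain")

# Placeholder base URL for a local standalone Solr instance.
request_url = "http://localhost:8983/solr/update?" + urlencode(arguments)
print(request_url)
```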

The next tab is the "Documents" tab, which allows you to do document filtering based on size and mime types. By specifying a maximum document length in bytes, you can filter out documents which exceed that size (e.g. 10485760 which is equivalent to 10 MB). If you only want to add documents with specific mime types, you can enter them into the "included mime types" field (e.g. "text/html" for filtering out all documents but HTML). The "excluded mime types" field is for excluding documents with specific mime types (e.g. "image/jpeg" for filtering out JPEG images). The tab looks like:

The fifth tab is the "Commits" tab, which allows you to control the commit strategies. As well as committing documents at the end of every job, an option which is enabled by default, you may also commit each document within a certain time in milliseconds (e.g. "10000" for committing within 10 seconds). The "commit within" strategy leaves responsibility for committing to Solr rather than ManifoldCF. The tab looks like:

When you are done, don't forget to click the "Save" button to save your changes! When you do, a connection summary and status screen will be presented, which may look something like this:

Note that in this example, the Solr connection is not responding, which is leading to an error status message instead of "Connection working".

Transformation Connection Types

Allowed Documents

The Allowed Documents transformation filter is used to limit the documents that will be fetched and passed down the pipeline for indexing. The filter allows documents to be restricted by mime type, by extension, and by length.

It is important to note that these various methods of filtering rely on the upstream repository connection type to implement them. Some repository connection types do not implement all of the available methods of filtering. For example, filtering by URL (and hence file extension) makes little sense in the context of a repository connection type whose URLs do not include a full file name.
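
The three checks, and the reason extension filtering depends on the form of the URL, can be illustrated with a short Python sketch. It is not the filter's implementation: the function name, the sample values, and the decision to reject a document whose URL has no extension are all assumptions made for the example.

```python
import posixpath
from urllib.parse import urlparse


def is_allowed(url, mime_type, length, allowed_mime_types, allowed_extensions, max_length):
    """Return True when a document passes the length, mime type, and extension checks."""
    if length > max_length:
        return False
    if mime_type.lower() not in allowed_mime_types:
        return False
    # The extension comes from the last path segment of the URL; a URL
    # without a file name yields an empty extension.
    extension = posixpath.splitext(urlparse(url).path)[1].lstrip(".").lower()
    return extension in allowed_extensions


MIME_TYPES = {"text/html", "application/pdf"}
EXTENSIONS = {"html", "pdf"}
MAX_LENGTH = 10 * 1024 * 1024  # 10 MB, an arbitrary example limit

with_file_name = is_allowed("http://example.com/docs/report.pdf", "application/pdf",
                            2048, MIME_TYPES, EXTENSIONS, MAX_LENGTH)
without_file_name = is_allowed("http://example.com/view?id=42", "application/pdf",
                               2048, MIME_TYPES, EXTENSIONS, MAX_LENGTH)
print(with_file_name, without_file_name)
```

In this sketch the second document is rejected only because its URL carries no file name, which is the situation the paragraph above warns about.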